Abstract

Background

This study aimed to gain insight into the optimal spacing in time for visual field tests for progression detection in glaucoma.

Methods

Three perimetric strategies for progression detection were compared by means of simulation experiments in a theoretical cohort. In strategies 1 and 2, visual field testing was performed at fixed-spaced inter-test intervals of 3 and 6 months, respectively. In strategy 3, the inter-test interval was kept at 1 year as long as the fields appeared unchanged; as soon as progression was suspected, confirmation or falsification was performed promptly. Assuming linear deterioration, follow-up fields were compared against a baseline using two different progression criteria. Outcome measures were: (1) specificity, (2) time delay until the diagnosis of definite progression, and (3) number of required tests.

Results

Strategies 2 and 3 had a higher specificity than strategy 1. Strategies 1 and 3 detected progression earlier than strategy 2. The number of required visual field tests was lowest for strategy 3.

Conclusion

Perimetry in glaucoma can be optimised by postponing the next test under apparently stable field conditions and bringing the next test forward once progression is suspected.


Introduction

Perimetry is currently the most widely used diagnostic technique for the detection of progression in glaucoma. Traditionally, perimetry is performed at fixed-spaced inter-test intervals. Inter-test intervals of 3 months have been shown to be optimal [1, 2], while intervals of 6 months are considered an acceptable compromise between information yield and costs [3].

The fixed-spaced nature of inter-test intervals is not grounded in firm evidence. In fact, a recent study has suggested that fixed-spaced inter-test intervals are not optimal [4]. That study argued that the next test could be postponed in the case of an apparently stable field, and should be brought forward once progression is suspected. This so-called adaptive testing should allow for earlier diagnosis and a lower overall perimetric frequency. The superior performance of this adaptive approach is a consequence of Bayes' theorem: because the prior probability of progression is low, apparent stability needs less confirmation than suspected progression [5].
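The Bayesian argument can be made concrete with a small sketch. It assumes, purely for illustration, that a single field has 80% sensitivity and 90% specificity for true progression, that successive fields are independent given the true state, and that the prior probability of progression is 10%. None of these numbers comes from the study.

```python
def posterior_progression(prior: float, sensitivity: float,
                          specificity: float, suspicious: bool) -> float:
    """Probability of true progression after one field, by Bayes' theorem.

    suspicious=True means the field looked worse than baseline.
    Assumes fixed, known sensitivity and specificity for a single field.
    """
    if suspicious:
        likelihood_prog = sensitivity          # P(suspicious | progression)
        likelihood_stable = 1.0 - specificity  # P(suspicious | stable)
    else:
        likelihood_prog = 1.0 - sensitivity    # P(stable-looking | progression)
        likelihood_stable = specificity        # P(stable-looking | stable)
    numerator = likelihood_prog * prior
    return numerator / (numerator + likelihood_stable * (1.0 - prior))


# Hypothetical numbers for illustration only.
PRIOR, SENS, SPEC = 0.10, 0.80, 0.90

after_stable = posterior_progression(PRIOR, SENS, SPEC, suspicious=False)

# Chain three suspicious fields, feeding each posterior in as the next prior.
chain = [PRIOR]
for _ in range(3):
    chain.append(posterior_progression(chain[-1], SENS, SPEC, suspicious=True))

print(f"after one stable-looking field: {after_stable:.3f}")
print("after 1, 2, 3 suspicious fields:", [round(p, 3) for p in chain[1:]])
```

With these assumed numbers, one stable-looking field lowers the probability of progression from 10% to about 2.4%, whereas one suspicious field raises it only to about 47%; two further suspicious fields are needed to bring it to about 98%.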

The above-mentioned study took the form of a thought experiment assuming glaucomatous deterioration to be a process occurring stepwise. Whether glaucomatous deterioration is a stepwise or a continuous process has not yet been settled. Stepwise progression has been reported, but arguments in favour of continuous loss have also been published [6–8].

The aim of the present study is to investigate whether adaptive testing is more efficient than testing at fixed-spaced inter-test intervals when glaucomatous deterioration occurs continuously. Three perimetric strategies were compared for this purpose. In strategies 1 and 2, testing was performed at fixed-spaced inter-test intervals of 3 and 6 months, respectively. In strategy 3, the inter-test interval was kept at 1 year as long as the fields appeared unchanged; as soon as progression was suspected, confirmation or falsification was performed promptly. These three strategies were compared by means of simulation experiments in a theoretical cohort assuming linear deterioration, using two different progression criteria: one based on nonparametric ranking [5] and the other on the AGIS criterion [9]. Outcome measures were: (1) specificity, (2) time delay until the diagnosis of definite progression, and (3) number of required tests.

Materials and methods

A visual field test result was represented by a single real number x. This number could, for instance, represent a global index parameter such as mean deviation (MD) or the AGIS score [9, 10]. Perimetric variability was modelled by adding a normally distributed random number e with mean 0 and standard deviation SD to x. In this study, 4,000 independent series of normally distributed random numbers were generated using ASYST 3.10 (Asyst Software Technologies, Rochester, NY, USA) to construct 4,000 series of visual field test results. This large number was chosen in order to minimize the influence of chance on the final results. Deterioration was modelled as a linear decay at rate r. Hence:

$$x = -r\,t + e$$

where t is the time since the start of follow-up. Calculations were performed for stable series (r = 0) to assess specificity, and for three different rates of glaucomatous deterioration: r = 0.5, 1.0 and 2.0 SD/yr. Taking one SD as 2 dB, these values roughly correspond to MD losses of 1, 2 and 4 dB/yr [8]. Rates of 1–2 dB/yr are typical of glaucoma patients with progression [8]; 4 dB/yr was added to study the performance of the various strategies in patients with a higher than average rate of progression.
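The simulation model described above (a linear decay at rate r plus normally distributed variability e) can be sketched in a few lines. This is an illustration under stated assumptions, not the original ASYST implementation: scores are expressed in SD units (so the noise has SD 1), lower scores are worse, and the conversion of 2 dB per SD is the one given in the text.

```python
import random

DB_PER_SD = 2.0  # one SD of perimetric variability taken as 2 dB of MD


def simulate_series(times, r, sd=1.0, rng=None):
    """Simulated field scores x = -r*t + e at the given times (in years).

    r is the rate of deterioration in SD/yr and e ~ Normal(0, sd).
    Lower x means a worse field; r = 0 gives a stable series.
    """
    rng = rng or random.Random()
    return [-r * t + rng.gauss(0.0, sd) for t in times]


# Rates used in the study and their approximate MD equivalents.
for r in (0.5, 1.0, 2.0):
    print(f"r = {r} SD/yr ~ {r * DB_PER_SD:.0f} dB/yr MD loss")

# A stable cohort of 4,000 series, one field at t = 0 and one at t = 4 years.
rng = random.Random(2007)
cohort = [simulate_series([0.0, 4.0], r=0.0, rng=rng) for _ in range(4000)]
```

Seeding the generator makes a cohort reproducible, which is useful when strategies are compared on the same simulated patients.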

A test result worse than a predefined baseline was denoted as suspected progression. For the diagnosis of definite progression, two confirmations of this suspected progression were required; that is, three consecutive follow-up fields had to score worse than the predefined baseline. In criterion I, the predefined baseline was the worse score of two baseline fields [5]. In criterion II, the predefined baseline was the score of a reference baseline field shifted by an offset D in the direction of deterioration [9]. For the AGIS criterion, D = 1.6 SD (see Discussion) [9].
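Both criteria reduce to comparing each follow-up score with a threshold and waiting for three consecutive scores below it. The sketch below assumes scores in SD units with lower values worse. For criterion II it takes, as a hypothetical choice not specified in the text, the mean of the baseline fields as the reference baseline; the example scores are made up.

```python
def progression_threshold(baseline, criterion, offset_d=1.6):
    """Score below which a follow-up field counts as suspected progression.

    baseline: scores of the baseline fields (lower = worse, SD units).
    Criterion I: the worse of the two baseline fields.
    Criterion II: a reference baseline minus an offset D (1.6 SD for AGIS);
    the reference is taken here as the baseline mean (an assumption).
    """
    if criterion == "I":
        return min(baseline[:2])
    if criterion == "II":
        reference = sum(baseline) / len(baseline)
        return reference - offset_d
    raise ValueError(f"unknown criterion: {criterion!r}")


def first_definite_progression(threshold, follow_up, n_required=3):
    """Index of the follow-up field that completes definite progression, or None.

    Definite progression = n_required consecutive fields below the threshold
    (one suspected progression plus two confirmations).
    """
    run = 0
    for i, x in enumerate(follow_up):
        run = run + 1 if x < threshold else 0  # a non-worse field falsifies
        if run == n_required:
            return i
    return None


baseline = [0.3, -0.2]
follow_up = [-0.5, 0.1, -0.4, -0.9, -1.8, -2.1]
print(first_definite_progression(progression_threshold(baseline, "I"), follow_up))
print(first_definite_progression(progression_threshold(baseline, "II"), follow_up))
```

On this made-up series, criterion I diagnoses definite progression at the fifth follow-up field, while criterion II, with its offset of 1.6 SD, has not yet done so, illustrating why the offset criterion is the more conservative of the two.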

Calculations were performed for three different strategies for visual field test spacing. In strategies 1 and 2, testing was performed at fixed-spaced inter-test intervals of 3 and 6 months, respectively. In strategy 3, the inter-test interval was kept at 1 year (see Discussion) as long as the fields appeared unchanged; as soon as progression was suspected, confirmation or falsification was performed promptly.
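The three spacing strategies can be combined with the linear model and criterion I into a small Monte Carlo sketch. Several details are assumptions not stated in the text: both baseline fields are taken at t = 0, follow-up lasts 4 years, only follow-up fields are counted as tests, "promptly" is modelled with a hypothetical confirmation delay of one month (`CONFIRM_DELAY_YR`), and after a falsifying field strategy 3 returns to yearly testing.

```python
import random

HORIZON_YR = 4.0            # length of follow-up (years)
CONFIRM_DELAY_YR = 1 / 12   # hypothetical reading of "promptly": one month


def run_series(strategy, r, rng, sd=1.0):
    """Simulate one series; return (time of definite progression or None, n tests).

    Scores follow x = -r*t + e in SD units (lower = worse). Criterion I is used:
    the threshold is the worse of two baseline fields taken at t = 0, and three
    consecutive follow-up fields below it give definite progression.
    """
    if strategy not in (1, 2, 3):
        raise ValueError("strategy must be 1, 2 or 3")

    def field(t):
        return -r * t + rng.gauss(0.0, sd)

    threshold = min(field(0.0), field(0.0))
    t, n_tests, run = 0.0, 0, 0   # run = consecutive fields below threshold
    while True:
        if strategy == 1:
            t += 0.25                 # fixed 3-month interval
        elif strategy == 2:
            t += 0.5                  # fixed 6-month interval
        else:                         # adaptive: yearly unless confirming
            t += CONFIRM_DELAY_YR if run > 0 else 1.0
        if t > HORIZON_YR + 1e-9:     # end of follow-up without diagnosis
            return None, n_tests
        n_tests += 1
        run = run + 1 if field(t) < threshold else 0
        if run == 3:
            return t, n_tests


def cohort_summary(strategy, r, n=4000, seed=1):
    """Proportion with definite progression and mean number of follow-up tests."""
    rng = random.Random(seed)
    results = [run_series(strategy, r, rng) for _ in range(n)]
    diagnosed = sum(1 for t, _ in results if t is not None)
    mean_tests = sum(k for _, k in results) / n
    return diagnosed / n, mean_tests


for s in (1, 2, 3):
    for r in (0.0, 1.0):
        p, k = cohort_summary(s, r)
        print(f"strategy {s}, r = {r}: diagnosed {p:.2f}, mean tests {k:.1f}")
```

With `sd=0.0` the sketch becomes deterministic, which makes the schedules easy to check: a stable series then receives 16, 8 and 4 follow-up tests under strategies 1, 2 and 3, respectively.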

Results

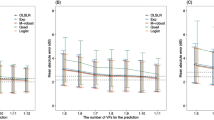

Figure 1 shows the proportion of the cohort with definite progression as a function of follow-up time for strategies 1–3 for stable series (a; r = 0) and for three different rates of progression (b; r = 0.5, 1.0 and 2.0 SD/yr), using criterion I. Specificity was lowest for strategy 1; that is, strategy 1 had the highest incidence of definite progression in stable series (Fig. 1a). Strategy 2 delayed the diagnosis of definite progression compared with strategies 1 and 3 (Fig. 1b). Figure 2 presents the corresponding results using criterion II with offset D = 1.6 SD. Again, specificity was lowest for strategy 1 (Fig. 2a), and strategy 2 delayed the diagnosis of definite progression (Fig. 2b).

Fig. 1 Proportion of the cohort with diagnosed definite progression as a function of the follow-up period for stable series of fields (a) and for series with three different rates of glaucomatous deterioration: 0.5, 1.0 and 2.0 SD/yr (b), for strategies 1, 2 and 3, using criterion I (nonparametric ranking)

Fig. 2 Proportion of the cohort with diagnosed definite progression as a function of the follow-up period for stable series of fields (a) and for series with three different rates of glaucomatous deterioration: 0.5, 1.0 and 2.0 SD/yr (b), for strategies 1, 2 and 3, using criterion II (AGIS criterion)

Table 1 shows the average number of tests performed up to the diagnosis of definite progression for all three strategies for both stable series (r = 0) and three different rates of progression (r = 0.5, 1.0 and 2.0 SD/yr), using criterion I. Where a series did not reach definite progression within the 4-year follow-up, the number of tests performed during the entire follow-up period was counted. Table 2 presents the corresponding results using criterion II with offset D = 1.6 SD. In all situations, the lowest number of tests was required when using strategy 3.

Discussion

In this study, three perimetric strategies for progression detection in glaucoma were compared: one with a fixed-spaced inter-test interval of 3 months (1), one with a fixed-spaced inter-test interval of 6 months (2) and one with adaptive inter-test intervals (3). Strategy 1 had the lowest specificity. Strategy 2 detected progression later than strategies 1 and 3. The lowest number of tests was required for strategy 3.

The differences in the numbers of required visual field tests in the 4-year period for the three different strategies were most apparent for r = 0, i.e., for patients without deterioration (Tables 1 and 2). Since in reality only a minority of glaucoma patients displays progression [13], the overall perimetric frequency will mainly be determined by the stable patients. Hence, strategy 3 does in fact have the lowest overall perimetric frequency.

The offset D = 1.6 SD as used in the current study was based on AGIS [9]. A comparison between Figs. 1 and 2 clearly reveals a higher specificity and a lower sensitivity for criterion II when compared to criterion I. This finding in simulation experiments is in agreement with analyses of measured patient data using various criteria for progression, demonstrating a high specificity and a low progression rate for the AGIS criterion [11, 12].

The original AGIS threshold was 4 AGIS units and the average perimetric variability within the AGIS study population was 2.5 units [9]; together these give the threshold of 4/2.5 = 1.6 SD used in this study. In reality, some patients display above-average variability whereas others display below-average variability. For that reason, situations with above- and below-average perimetric variability were also explored. Within a realistic range of perimetric variabilities, strategy 3 (the strategy with adaptive inter-test intervals) remained the most efficient (data not shown).

An implicit assumption in this study was that each visual field test performed could be scored—i.e., each test was assumed to be reliable. Obviously, this is not the case in clinical reality, and unreliable tests have to be repeated. Hence, more tests may be needed in reality as compared to theory. It is reasonable to assume, however, that the proportion of unreliable tests does not depend on the strategy applied. Thus, also in the presence of unreliable tests, strategy 3 will remain the most efficient approach.

The interval of one year used here in the adaptive approach was based on two facts: (1) that it takes on average 1 year to build up a prior probability of progression of 10%, and (2) that it is virtually impossible to detect progression with a prior probability of less than 10% in clinical practice [5, 13]. In poorly regulated patients, the 10% prior probability of progression will be reached within a year; therefore, a shorter inter-test interval should be used. Conversely, some other patients may be sent home safely for longer than a year. Generally, known risk factors for progression may be incorporated into the model by adjusting the inter-test interval of the adaptive approach. Disease stage (MD, AGIS score) could be such a risk factor [14].
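One way to turn a patient's risk profile into an inter-test interval is sketched below. It assumes, hypothetically, a constant hazard of progression, so that the probability of progression having occurred since the last test grows as 1 − (1 − a)^t for an annual risk a. With a = 10% this reproduces the 1-year interval for a 10% prior probability. The constant-hazard model and the `annual_risk` values are illustrative assumptions, not part of the study.

```python
import math


def interval_for_prior(annual_risk: float, target_prior: float = 0.10) -> float:
    """Years until the cumulative probability of progression reaches target_prior.

    Assumes a constant hazard: P(t) = 1 - (1 - annual_risk) ** t.
    """
    if not (0.0 < annual_risk < 1.0 and 0.0 < target_prior < 1.0):
        raise ValueError("probabilities must lie strictly between 0 and 1")
    return math.log(1.0 - target_prior) / math.log(1.0 - annual_risk)


for risk in (0.05, 0.10, 0.20):  # hypothetical low, average and high annual risk
    months = 12 * interval_for_prior(risk)
    print(f"annual risk {risk:.0%}: next test after ~{months:.0f} months")
```

Under these assumptions, an annual risk of 20% shortens the interval to about 6 months, whereas an annual risk of 5% extends it to about 2 years.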

As mentioned in the Introduction, it is largely unknown whether glaucomatous deterioration is a continuous or a stepwise process. This question cannot easily be solved, because the phenotype of continuous loss may resemble that of stepwise progression, and vice versa, due to perimetric variability and discrete sampling. Both types of progression have been reported [6, 7]. The current study showed adaptive testing to be the preferred approach for continuous loss; an earlier study yielded the same conclusion for stepwise progression [4]. Therefore, it seems reasonable to conclude that adaptive testing is the most efficient approach irrespective of what the exact underlying nature of glaucomatous deterioration is.

In some cases, especially in elderly patients with early glaucoma, it may be of more importance to monitor the rate of progression than the occurrence of any progression. This can also be achieved using adaptive testing: small changes may be accepted without prompt confirmation. Where a change occurs that could exceed the acceptable rate of progression, however, the next test should be brought forward. After all, fixed-spaced inter-test intervals are not a prerequisite for slope determination: linear regression can be applied to any set of data points without assuming that the points are equally spaced along the x-axis.
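To illustrate that unequal spacing is no obstacle to slope estimation, the sketch below fits an ordinary least-squares line to an irregular, adaptive-style schedule. The time points and MD values are made up for illustration.

```python
def ols_fit(times, values):
    """Ordinary least-squares slope and intercept; times need not be equally spaced."""
    n = len(times)
    if n != len(values) or n < 2:
        raise ValueError("need at least two (time, value) pairs")
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("times must not all be equal")
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = sxy / sxx
    return slope, mean_v - slope * mean_t


# Hypothetical schedule: yearly tests, with one test brought forward after a change.
times = [0.0, 1.0, 2.0, 2.25, 3.0]   # years
md = [-3.0, -3.6, -4.9, -5.0, -5.7]  # dB (made-up values)
slope, intercept = ols_fit(times, md)
print(f"rate of progression: {slope:.2f} dB/yr")
```

The same formula applies whatever the spacing; irregular intervals only change how much each point contributes to the estimate through its distance from the mean time.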

In the case of new patients, it cannot be known in advance whether they represent stable or deteriorating cases. As a consequence, adaptive testing cannot be applied from the outset. Perimetry in a new patient has to start at a relatively high perimetric frequency, such as one visual field test every 3 months. As soon as stability has been shown, a switch can be made to adaptive testing.

Finally, it should be emphasized that the findings as presented here are by no means specific to perimetry. As a consequence, these findings can be applied to the monitoring of any variable that has normal variability, both within and outside the field of glaucoma. The only prerequisite is that the prior probability of change is small.

In conclusion, perimetry in glaucoma can be made more efficient by postponing the next test under apparently stable field conditions (typically 1 year) and bringing the next test forward once progression is suspected.

References

Viswanathan AC, Hitchings RA, Fitzke FW (1997) How often do patients need visual field tests? Graefes Arch Clin Exp Ophthalmol 235:563–568

Gardiner SK, Crabb DP (2002) Frequency of testing for detecting visual field progression. Br J Ophthalmol 86:560–564

European Glaucoma Society (2003) Terminology and guidelines for glaucoma, 2nd edn. Dogma, Savona

Jansonius NM (2006) Towards an optimal perimetric strategy for progression detection in glaucoma: from fixed-space to adaptive inter-test intervals. Graefes Arch Clin Exp Ophthalmol 244:390–393

Jansonius NM (2005) Bayes’ theorem applied to perimetric progression detection in glaucoma: from specificity to positive predictive value. Graefes Arch Clin Exp Ophthalmol 243:433–437

Mikelberg FS, Schulzer M, Drance SM, Lau W (1986) The rate of progression of scotomas in glaucoma. Am J Ophthalmol 101:1–6

McNaught AI, Crabb DP, Fitzke FW, Hitchings RA (1995) Modelling series of visual fields to detect progression in normal-tension glaucoma. Graefes Arch Clin Exp Ophthalmol 233:750–755

Smith SD, Katz J, Quigley HA (1996) Analysis of progressive change in automated visual fields in glaucoma. Invest Ophthalmol Vis Sci 37:1419–1428

AGIS investigators (1994) Advanced glaucoma intervention study 2: visual field test scoring and reliability. Ophthalmology 101:1445–1455

Heijl A, Lindgren G, Olsson J (1986) A package for the statistical analysis of visual fields. Doc Ophthalmol Proc Ser 49:153–168

Katz J, Congdon N, Friedman DS (1999) Methodological variations in estimating apparent progressive visual field loss in clinical trials of glaucoma treatment. Arch Ophthalmol 117:1137–1142

Vesti E, Johnson CA, Chauhan BC (2003) Comparison of different methods for detecting glaucomatous visual field progression. Invest Ophthalmol Vis Sci 44:3873–3879

Heijl A, Leske MC, Bengtsson B, Hyman L, Bengtsson B, Hussein M, EMGT group (2002) Reduction of intraocular pressure and glaucoma progression. Arch Ophthalmol 120:1268–1279

Leske MC, Heijl A, Hussein M, Bengtsson B, Hyman L, Komaroff E, EMGT group (2003) Factors for glaucoma progression and the effect of treatment: the early manifest glaucoma trial. Arch Ophthalmol 121:48–56

Additional information

Conflict of interest: none. The author has full control of all primary data, and agrees to allow Graefe’s Archive to review the data upon request.

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License ( https://creativecommons.org/licenses/by-nc/2.0 ), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Jansonius, N.M. Progression detection in glaucoma can be made more efficient by using a variable interval between successive visual field tests. Graefes Arch Clin Exp Ophthalmol 245, 1647–1651 (2007). https://doi.org/10.1007/s00417-007-0576-7

DOI: https://doi.org/10.1007/s00417-007-0576-7